CDS workflow functionality

Koen Hufkens

2024-08-19

Source:vignettes/cds_workflow_vignette.Rmd

cds_workflow_vignette.RmdRunning workflows on Copernicus’ Climate Data Store

Copernicus.eu provides a set of interesting data sets for research, education, and applied earth sciences on their Climate Data Store (CDS). However, in many cases through the conventional API you can only download the raw data at their native temporal resolution.

Many people however don’t require hourly or 6-hourly data for their research purposes. Using the standard API therefore results in queries of large amount of data which then need to be temporally down-sampled to get, for example, daily mean temperature values.

Many of these issues can be side stepped by using the CDS

Toolbox workflows. These allow you to execute python

code on CDS servers, as provided through an R script.

The Workflow syntax

Below we’ll use a CDS Toolbox python script to extract a time series for a variable at a given (point) location. This is a common operation for people working with point (site/location) data.

On the CDS website the example script

03 Extract time series and plot graph in the toolbox editor

allows you to extract a daily mean time series for a given (defined)

variable and a given location. The code below is normally used within

the context of the CDS Toolbox Editor platform. However, we can reuse

the same code within the context of a ecmwfr workflow

request.

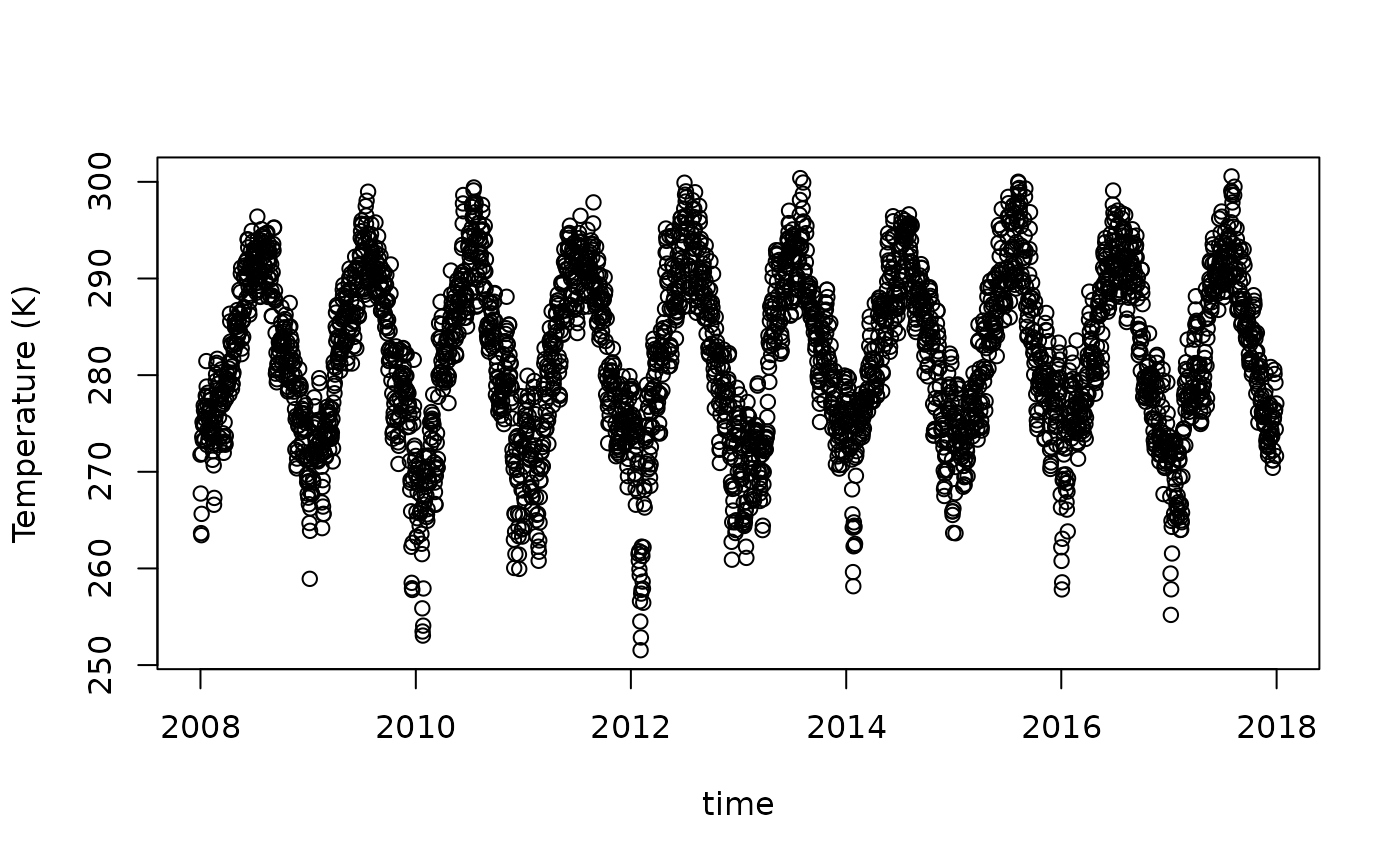

The code below lists all available variables to consider in combination with an API call defining the data ranges available (both spatial and temporal). This example is limited to the years 2008 - 2017 and 6-hourly time steps (although more data is available).

We will make one minor change to the original script which is

critical for the success of our workflow. Instead of returning a figure,

we will want to return the data on which the figure is based. We

therefore changed the last line of the original file to

return data_daily. We also set the output format from a

live figure to a download. We can then save this script as a normal

python script in any editor. For this example the data was saved into a

file called era5.py

import cdstoolbox as ct

layout = {

'input_ncols': 3,

}

variables = {

'Near-Surface Air Temperature': '2m_temperature',

'Eastward Near-Surface Wind': '10m_u_component_of_wind',

'Northward Near-Surface Wind': '10m_v_component_of_wind',

'Sea Level Pressure': 'mean_sea_level_pressure',

'Sea Surface Temperature': 'sea_surface_temperature',

}

@ct.application(title='Extract a time series and plot graph', layout=layout)

@ct.input.dropdown('var', label='Variable', values=variables.keys(), description='Sample variables')

@ct.input.text('lon', label='Longitude', type=float, default=75., description='Decimal degrees')

@ct.input.text('lat', label='Latitude', type=float, default=43., description='Decimal degrees')

# @ct.output.livefigure() # Disable live figure!

@ct.output.download() # Enable a plain download

def plot_time_series(var, lon, lat):

"""

Application main steps:

- set the application layout with 3 columns for the input and output at the bottom

- retrieve a variable over a defined time range

- select a location, defined by longitude and latitude coordinates

- compute the daily average

- show the result as a timeseries on an interactive chart

"""

# Time range

data = ct.catalogue.retrieve(

'reanalysis-era5-single-levels',

{

'variable': variables[var],

'grid': ['3', '3'],

'product_type': 'reanalysis',

'year': [

'2008', '2009', '2010',

'2011', '2012', '2013',

'2014', '2015', '2016',

'2017'

],

'month': [

'01', '02', '03', '04', '05', '06',

'07', '08', '09', '10', '11', '12'

],

'day': [

'01', '02', '03', '04', '05', '06',

'07', '08', '09', '10', '11', '12',

'13', '14', '15', '16', '17', '18',

'19', '20', '21', '22', '23', '24',

'25', '26', '27', '28', '29', '30',

'31'

],

'time': ['00:00', '06:00', '12:00', '18:00'],

}

)

# Location selection

# Extract the closest point to selected lon/lat (no interpolation).

# If wrong number is set for latitude, the closest available one is chosen:

# e.g. if lat = 4000 -> lat = 90.

# If wrong number is set for longitude, first a wrap in [-180, 180] is made,

# then the closest one present is chosen:

# e.g. if lon = 200 -> lon = -160.

data_sel = ct.geo.extract_point(data, lon=lon, lat=lat)

# Daily mean on selection

data_daily = ct.climate.daily_mean(data_sel)

fig = ct.chart.line(data_daily)

#return fig

return data_dailyWith the script adjusted and saved we can now use it in our

ecmwfr workflow run as follows.

First we read in the python script as a long string into R. Use

readlines() and collapse the various lines using the

appropriate \n separator.

We then have to formulate a query which sets the right variables in

the python script, mainly the parameters var,

lat and lon. The request is a nested list with

a code argument, containing the code you want to run, a

kwargs variable containing a named list of variables to

forward to the code, a workflow_name defining the python

application you want to call (in this case

plot_time_series, and a target output variable

name setting the filename of the resulting output.

With a correct query specified we can now run this workflow request

using a normal wf_request() call, which will run the code

on the CDS server and return the result to the target file.

# A query for 2m surface temperature

request = list(

code = code,

kwargs = list(

var = "2m_temperature",

lat = 50,

lon = 20

),

workflow_name = "plot_time_series", # name of the python subroutine / app

target = "test.nc"

)

# download the data

file <- wf_request(

user = USER_ID,

request = request,

path = tempdir()

)Once the file is downloaded we can open this file using the

ncdf4 library. Depending on the python script used you will

be returned either netCDF formatted geospatial data

(i.e. maps) or non-geospatial data. In this case the returned data is

not geospatial, a time-series for our desired location.

Below we open the netCDF file and read in the

temperature (t2m) data into variable t.

# open the netcdf file and print the meta-data

f <- ncdf4::nc_open(file.path(tempdir(),"test.nc"))

print(f)

# read in the temperature data stored in field "t2m"

# and the dates from the "time" field

temp <- ncdf4::ncvar_get(nc = f, "t2m")

time <- ncdf4::ncvar_get(nc = f, "time")

# get the starting point of the time series

# and add the increments (time)

start <- ncdf4::ncatt_get(f, "time")$units

start <- as.Date(start, format = "days since %Y-%m-%d 00:00:00")

time <- start + time

# close the file once done

ncdf4::nc_close(f)We can now plot this data

plot(time, temp, ylab = "Temperature (K)")